Author Archives: Łukasz Bolikowski

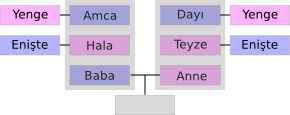

Turkish kinship vocabulary: Amca, Dayı, Enişte, Hala, Teyze, Yenge

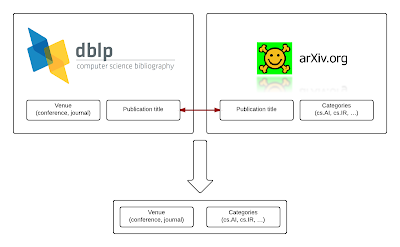

Tagging CS journals and conferences with arXiv subject areas

Data at hand

Merging

Summary

Open code, open data

Writing research papers can be a tiny bit easier

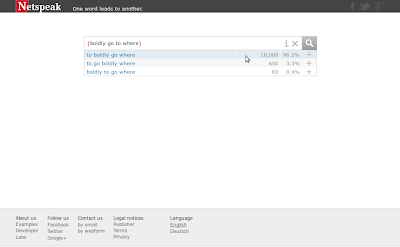

Recently I came across two useful web services that make it a bit easier to write research papers: Netspeak and Detexify².

As a non-native English speaker, I often struggle to choose the right words, and I used to ask Google for help. For example, I would enter the query “our research * that”, look for the most frequent words in the search results, and then issue additional queries such as “our research indicates that” and “our research shows that” to compare the hit counts.

With Netspeak it is easier: I simply write our research ? that and instantly get the most popular phrases with their counts. Netspeak can also find the most popular synonyms of a given word in a given context, or the most frequent order of given words:

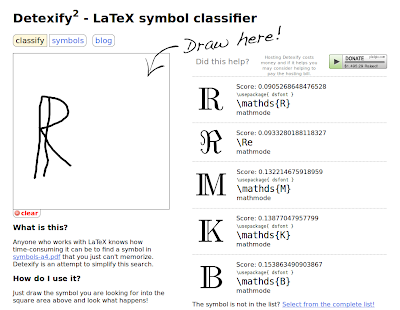

Detexify² solves another small inconvenience: whenever I could not remember the LaTeX command for a less common mathematical symbol, I had to consult long lists of symbols and their corresponding commands. Now I can simply draw the symbol, and Detexify² tells me the command and the package I need to use!

Interestingly, the back-end is written in Haskell, and its source code is available on GitHub.

Typoglycemia in Haskell

Can you decipher the following sentences? All hmuan biegns are bron fere and euqal in dgiinty and rgiths. Tehy are ednwoed wtih raeosn and cnocseicne and sohlud act tworads one aonhter in a sipirt of borhtreohod. It’s the first article of the Universal D…
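The excerpt is cut off before any code appears, so the post's own implementation is not shown here. As a rough illustration only, here is a minimal Haskell sketch of one way to produce this kind of text. It keeps the first and last letter of every word and swaps the interior letters pairwise. The names `swapPairs`, `scrambleWord`, and `scrambleText` are hypothetical, and the deterministic pair swap is an assumption chosen to avoid a dependency on a random-number package. It does, however, yield the same scrambled words as the sentences quoted above.

```haskell
import Data.Char (isAlpha)
import Data.Function (on)
import Data.List (groupBy)

-- Swap neighbouring elements pairwise: "abcd" becomes "badc".
-- A trailing unpaired element stays where it is.
swapPairs :: [a] -> [a]
swapPairs (x : y : rest) = y : x : swapPairs rest
swapPairs xs             = xs

-- Scramble a single word: the first and last letters stay in place,
-- the interior letters are swapped pairwise.
-- Words with fewer than four letters are returned unchanged.
scrambleWord :: String -> String
scrambleWord (c : cs)
  | length cs >= 3 = c : swapPairs (init cs) ++ [last cs]
scrambleWord w = w

-- Split the text into maximal runs of letters and non-letters,
-- scramble only the letter runs, and concatenate the pieces again,
-- so spacing and punctuation are preserved.
scrambleText :: String -> String
scrambleText = concatMap go . groupBy ((==) `on` isAlpha)
  where
    go run@(c : _) | isAlpha c = scrambleWord run
    go run                     = run

main :: IO ()
main =
  putStrLn (scrambleText "All human beings are born free and equal in dignity and rights.")
  -- prints: All hmuan biegns are bron fere and euqal in dgiinty and rgiths.
```

A randomised variant would shuffle the interior letters instead of calling `swapPairs`. The fixed swap keeps the sketch within `base` and makes the output reproducible.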

Assorted curiosities: Geography

Fun facts learned while clicking through Wikipedia: Treasure Island in Ontario, Canada is probably the largest island in a lake in an island in a lake. Liechtenstein and Uzbekistan are doubly landlocked countries, i.e., all the neighbouring countries are…
