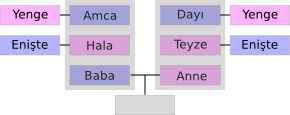

Turkish kinship vocabulary: Amca, Dayı, Enişte, Hala, Teyze, Yenge

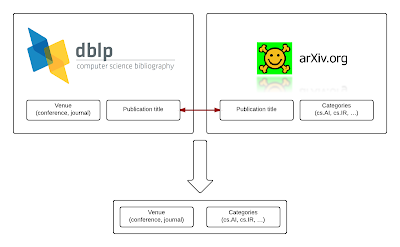

Tagging CS journals and conferences with arXiv subject areas

Data at hand

Merging

Summary

Open code, open data

Package intergraph goes 2.0

Yesterday I submitted a new version (marked 2.0-0) of package ‘intergraph’ to CRAN. There are some major changes and bug fixes. Here is a summary:

- The package supports “igraph” objects created with ‘igraph’ version 0.6-0 and newer (vertex indexing starting from 1, not 0) only!

- Main functions for converting network data between object classes “igraph” and “network” are now called `asIgraph` and `asNetwork`.

- There is a generic function `asDF` that converts a network object to a list of two data frames containing (1) an edge list with edge attributes and (2) a vertex database with vertex attributes.

- Functions `asNetwork` and `asIgraph` allow for creating network objects from data frames (edge lists with edge attributes and vertex databases with vertex attributes).
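A minimal sketch of how the three functions fit together, assuming ‘intergraph’ 2.0 or later with ‘igraph’ and ‘network’ installed. The toy data frames and their column names are made up for illustration, and the argument names (`vertices`, `directed`) are an assumption based on the package documentation, so check `?asIgraph` before relying on them.

```r
library(intergraph)

# Toy edge list (made-up data): the first two columns identify the
# endpoints of each tie, remaining columns become edge attributes.
edges <- data.frame(
  from   = c(1, 2, 3),
  to     = c(2, 3, 1),
  weight = c(0.5, 1.0, 2.0)
)

# Toy vertex database (made-up data): the first column holds vertex ids,
# remaining columns become vertex attributes.
vertices <- data.frame(
  id    = 1:3,
  group = c("a", "b", "a")
)

# Data frames -> "igraph" object
g <- asIgraph(edges, vertices = vertices, directed = TRUE)

# "igraph" -> "network" and back again
net <- asNetwork(g)
g2  <- asIgraph(net)

# Network object -> list of two data frames (edges and vertices)
dfs <- asDF(net)
str(dfs)
```

The round trip through `asNetwork` and `asIgraph` is a quick way to check that the attributes you care about survive the conversion.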

I have written a short tutorial on using the package. It is available on the package home page on R-Forge. Here is the direct link.

Usage experiences and bug reports are more than welcome.

Assorted links

Some assorted links collected this week:

- An interesting-looking new book “Web Social Science” by Robert Ackland, coming out in July 2013.

- In a recent issue of Nature (March 28): a special on the future of scientific publishing.

- An interesting TEDtalk by Colin Camerer on neuroscience and experimental economics

- Nice paper analyzing world email traffic, co-authored by Michael Macy. Another example of using ‘igraph’ package for network analysis.

- Gary King and Stuart Shieber on Open Access science and publishing.

There are discussions in various places about merits, pitfalls, and misunderstandings related to buzzwords “bigdata”, “data science” (what a useless term it is…) etc., analysis being “data-driven” or “evidence-based” etc. Perhaps I will make a separate post on that at some point… For now:

- “Let the Data Speak for themselves”, a guest post by Joseph Rickert on Revolutions blog

- Echoes and comments of Nate Silver’s acclaimed book “The Signal and the Noise”, for example:

- Matt Asay on readwrite (hat tip to Dominik Batorski), and here

- at NYT

- David Brooks at NYT

- Petr Keil on data-driven science

Writing research papers can be a tiny bit easier

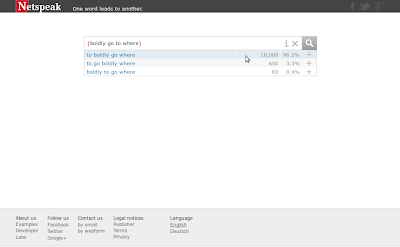

As a non-native English speaker, I often have problems with choosing the right words and I used to ask Google to help me. For example, I would formulate a query "our research * that", look for the most frequent words in the search results, and issue additional queries like "our research indicates that" and "our research shows that" to count hits.

With Netspeak it is easier: I simply write "our research ? that" and instantly get the most popular phrases with their counts. Netspeak can also find the most popular synonyms of a given word in a given context, or the most frequent order of given words:

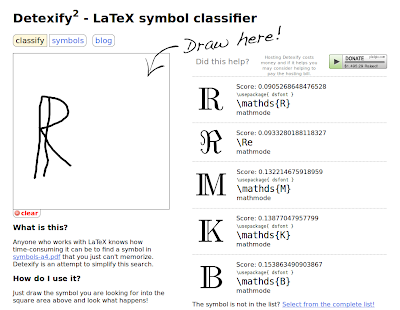

Detexify² solves another small inconvenience: when I couldn't remember the LaTeX instruction for a less common math symbol, I needed to consult looong lists of symbols and corresponding instructions. Now I can simply draw the symbol and Detexify² will tell me the instruction and the package I need to use!

Interestingly, the back-end is written in Haskell, and its source code is available on GitHub.

Correction to intergraph update

It turned out that I wrote the last post on the “intergraph” package too hastily. After some feedback from CRAN maintainers and deliberation, I decided to release the updated version of the “intergraph” package under the original name (so no new package “intergraph0”) with version number 1.2. This version relies on legacy “igraph” version 0.5, which is now called “igraph0”. Package “intergraph” 1.2 is now available on CRAN.

Meanwhile, I’m working on a new version of “intergraph”, scheduled to be ver. 1.3, which will rely on the new version 0.6 of “igraph”.

I am sorry for the mess.

Updates to package ‘intergraph’

On June 17 a new version (0.6) of package “igraph” was released. This new version abandoned the old way of indexing graph vertices with consecutive numbers starting from 0. The new version now numbers the vertices starting from 1, which is more consistent with the general R convention of indexing vectors, matrices, etc. Because this change is not backward-compatible, there is now a separate package called “igraph0” which still uses the old 0-convention.
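To make the change concrete, here is a small sketch in base R of what moving an edge list from the old 0-convention to the new 1-convention amounts to. The helper `shift_ids` is hypothetical (it is not part of “igraph” or “intergraph”) and the edge list is made up.

```r
# Edge list written for old 'igraph' (< 0.6): vertex ids start from 0.
edges0 <- data.frame(from = c(0, 1, 2), to = c(1, 2, 0))

# Hypothetical helper (not part of any package): shift the ids in the
# first two columns to the 1-based convention of 'igraph' 0.6 and later.
shift_ids <- function(el) {
  el[, 1:2] <- el[, 1:2] + 1
  el
}

edges1 <- shift_ids(edges0)
edges1
```

Any stored vertex ids in existing code (for example, results of earlier computations saved to disk) need the same shift before they can be used with the new version.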

These changes affect the package “intergraph”.

A new version of “intergraph” (ver 1.3) is being developed to be compatible with the new “igraph” 0.6. Until it is ready, package “intergraph” version 1.2 is available on CRAN, which still uses the old 0-convention. It relies on the legacy version of “igraph” (version 0.5, now called “igraph0” on CRAN).

To sum up:

- If you have code that still uses the old version of “igraph” (earlier than 0.6) you should load package “igraph0” instead of “igraph”, and use package “intergraph” version 1.2.

- If you already started using the new version of “igraph” (0.6 or later), unfortunately you have to wait until a new version of “intergraph” (1.3) is released.
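The same decision can be written down as a small base R sketch. The function `which_packages` is a hypothetical helper written for this post, not something provided by either package; the version string is passed in explicitly so that the code runs without “igraph” installed.

```r
# Hypothetical helper: which packages to use for a given 'igraph' version.
which_packages <- function(igraph_version) {
  if (package_version(igraph_version) < "0.6") {
    # old 0-convention
    c(graph = "igraph0", convert = "intergraph 1.2")
  } else {
    # new 1-convention
    c(graph = "igraph", convert = "intergraph 1.3 (not yet released)")
  }
}

which_packages("0.5.5")
which_packages("0.6")
```

In a real session the version string could come from `as.character(packageVersion("igraph"))`.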

Edit

As I wrote in the next post, in the end there is no package “intergraph0”, just the new version 1.2. Consequently, I have edited the description above.